- Finding a solution using a solver is rather quick.

- This approach gives an optimal solution, while my heuristic gives a suboptimal solution (for workload model 2). The open question, however, is whether the model can be called realistic.

- The paper manages to determine a solution under uncertainty in both demand and prices. The workload demand at a given moment is drawn from a probability distribution, and the on-demand price changes during the simulation with a certain probability.

So I read the paper again and tried to fully understand the GAMS source code. An overview of this analysis follows. The source code of the file we'll be discussing can be downloaded here. I also found a webpage from which this script can be submitted as a job to the NEOS server here. The first remarkable thing is that only 3 types of tasks/VM classes were used in the simulation, with the following resource demands.

To make this more realistic, there should be more possible task types, with a probability distribution specifying which tasks occur more or less often (to get a real-life load model).
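As a rough illustration of such a load model, the sketch below draws task types from a weighted distribution. The task names and weights are made up for the example and do not come from the paper or from its GAMS file.

```python
import random
from collections import Counter

# Hypothetical task classes and probabilities (assumptions for illustration only;
# the paper's simulation uses just three task/VM classes).
TASK_WEIGHTS = {
    "small": 0.50,
    "medium": 0.30,
    "large": 0.15,
    "xlarge": 0.05,
}


def sample_tasks(n, weights=TASK_WEIGHTS, seed=None):
    """Draw n task types, each chosen with the probability given in `weights`."""
    rng = random.Random(seed)  # a private generator keeps runs reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=n)


def empirical_shares(tasks):
    """Return the observed fraction of each task type in a sampled workload."""
    counts = Counter(tasks)
    return {name: count / len(tasks) for name, count in counts.items()}
```

Calling `empirical_shares(sample_tasks(10000, seed=42))` should give shares close to the configured weights, which is a quick sanity check before feeding such a workload into a solver.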

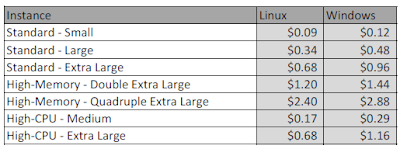

Four instance types were offered (from different cloud providers), with their characteristics specified in the following table. Since we would use this to schedule tasks and determine an optimal division between reserved and on-demand instances, we probably only need one instance type here (the one on which we'll schedule the given tasks).

The resource provisioning happens in three phases:

- Reserve a number of resources.

- Determine the actual usage of these resources.

- Determine the on-demand resources (which will be all the resources that are still needed after provisioning the reserved resources).
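The three phases above can be sketched for a single instance type and a single cost component (instance-hours). This is a simplification of my own, not the paper's formulation: the function names and the price parameters are assumptions chosen for the example.

```python
def provision(reserved, demand):
    """Split hourly demand into reserved usage and on-demand overflow.

    reserved: number of instances reserved up front (phase 1)
    demand:   list with the number of instances needed in each hour
    """
    # Phase 2: reserved instances are used up to the demand of each hour.
    used_reserved = [min(reserved, needed) for needed in demand]
    # Phase 3: whatever demand is still uncovered goes to on-demand instances.
    on_demand = [max(0, needed - reserved) for needed in demand]
    return used_reserved, on_demand


def total_cost(reserved, demand, reservation_fee, reserved_hourly, on_demand_hourly):
    """Total cost = reservation fees + reserved usage cost + on-demand cost."""
    used_reserved, on_demand = provision(reserved, demand)
    return (
        reserved * reservation_fee
        + reserved_hourly * sum(used_reserved)
        + on_demand_hourly * sum(on_demand)
    )
```

For a tiny demand profile one can simply try every candidate number of reserved instances and keep the cheapest, which is useful for checking a solver's answer on small inputs.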

To simplify the model, we could eliminate some of these costs (for example, keeping only the CPU-time costs, since that is the cost that really matters in my research). This would make finding the solution quicker and possibly allow us to extend the model to many more tasks and a longer period of time. Note that the period over which the VMs are currently scheduled is at most 1200 hours, which equals 50 days. It would become interesting if this could be extended to a year (which for Amazon equals 8736 hours).

You might find it strange that physical machines with the same characteristics have different prices (for example the reserved price of machine 1 vs machine 2), but this is possible because in this model they represent instances from different cloud providers.

The uncertainty about the price can be found in the parameters that depend on the M set. With a probability of 70 percent the price stays the same, and there is a 30 percent chance that the on-demand price increases. It does not increase a little: it is multiplied by 3. The following image illustrates this. This does not seem realistic; you can see in my previous blog post that for EC2 the chance of a price change is a lot smaller and the price actually decreases. Here the increase is used as a penalty, to represent a change in the price difference between on-demand and reserved resources. We noticed, however, that both prices change by about the same amount and that changes take effect immediately for both kinds of resources. So taking this into account is probably not realistic; it would be better to take a price decrease for both on-demand and reserved instances into account.
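To get a feeling for how heavy this penalty is, the two price scenarios can be turned into an expected price multiplier. The sketch below uses only the probabilities and the factor 3 mentioned above; the function and variable names are my own and the base price in the usage note is hypothetical.

```python
# (probability, multiplier applied to the on-demand price)
PRICE_SCENARIOS = [
    (0.7, 1.0),  # price stays the same
    (0.3, 3.0),  # price is multiplied by 3
]


def expected_multiplier(scenarios=PRICE_SCENARIOS):
    """Probability-weighted average of the on-demand price multipliers."""
    total_probability = sum(p for p, _ in scenarios)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p * multiplier for p, multiplier in scenarios)


def expected_on_demand_price(base_price, scenarios=PRICE_SCENARIOS):
    """Expected on-demand price for a given base price."""
    return base_price * expected_multiplier(scenarios)
```

With these scenarios the expected multiplier is 0.7 · 1 + 0.3 · 3, so about 1.6: on average the model assumes on-demand prices that are roughly 60 percent above the base price, which pushes the optimum strongly towards reservation.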

In this document the different elements that make up the formulas for the objective (total cost) and the constraints will be explained.

Finally, I tried to adapt the GAMS source file to optimize (find the minimal-cost solution for) the problem in which tasks distributed according to the given function are scheduled over a number of instances with the same characteristics, such that an instance only becomes reserved when enough of its hours are used during a period of one year. Prices can change in this model as well (with a different probability of a price decrease for on-demand than for reserved resources), but only the price of CPU time is taken into account. However, I still have trouble adding the constraint that an instance is only reserved when it is loaded enough. The current GAMS file can be downloaded here.
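Outside of GAMS, the "only reserve when loaded enough" rule can be sketched as a simple break-even check. This is not the constraint from my GAMS file (which does not work yet), only a plain Python approximation that ignores price changes; the fee and hourly prices are hypothetical parameters.

```python
def break_even_hours(reservation_fee, reserved_hourly, on_demand_hourly):
    """Hours of use above which reserving is cheaper than paying on-demand."""
    if on_demand_hourly <= reserved_hourly:
        raise ValueError("reserving never pays off at these prices")
    # fee + h * reserved_hourly < h * on_demand_hourly  <=>  h > fee / (difference)
    return reservation_fee / (on_demand_hourly - reserved_hourly)


def count_reserved(demand, reservation_fee, reserved_hourly, on_demand_hourly):
    """Count how many instances are loaded enough to be worth reserving.

    demand: list with the number of instances needed in each hour.
    Instance k (1-based) is assumed busy in every hour where demand >= k.
    """
    threshold = break_even_hours(reservation_fee, reserved_hourly, on_demand_hourly)
    reserved = 0
    for k in range(1, max(demand, default=0) + 1):
        busy_hours = sum(1 for needed in demand if needed >= k)
        if busy_hours > threshold:
            reserved += 1
    return reserved
```

Because instance k is never busier than instance k-1, the instances that pass the check always form a prefix, so the result can be read directly as "reserve the first n instances".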

By the way, a good guide to using GAMS can be found here.